The Singularity: A Proof

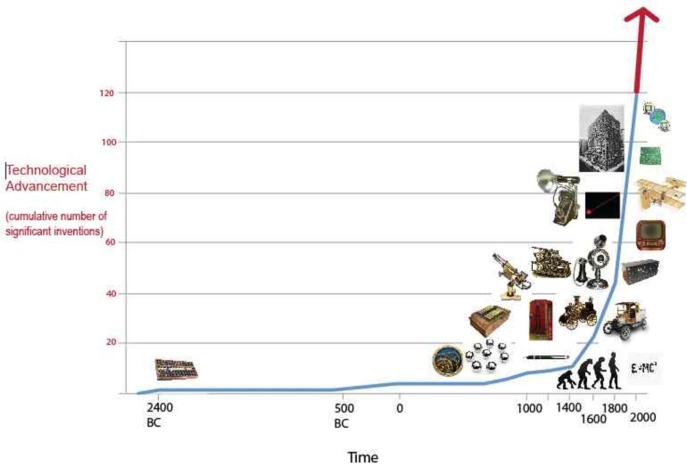

The common belief about technological improvement is that it proceeds on a linear scale: if all the major inventions in human history were plotted on a graph against the year they were invented, the line connecting the points would be straight. Technological advancement, most think, is a gradual climb upwards.

However, observations made over the last fifty years contradict the idea of gradual technological advancement. They suggest instead that technology improves exponentially over time: if all the major inventions in human history were plotted against the year they were invented, the line would curve upwards ever more sharply.

In 1965 Gordon Moore, who would go on to co-found Intel, observed that the number of transistors on an integrated circuit was doubling every year; in 1975 he revised the pace to a doubling every two years. This observation led him to predict that each generation of computers would grow smaller while also becoming more powerful.

Doesn’t sound like a big deal? It is.
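To get a feel for the scale, here is a minimal sketch of what a fixed doubling period does over time. It assumes an idealized, perfectly regular doubling every two years; the function name `transistor_multiplier` is made up for illustration, and real chips have never tracked the curve this neatly.

```python
def transistor_multiplier(years: float, doubling_period_years: float = 2.0) -> float:
    """Growth factor after `years`, assuming the count doubles every
    `doubling_period_years` (an idealized reading of Moore's Law)."""
    return 2.0 ** (years / doubling_period_years)


for years in (2, 10, 20, 50):
    print(f"{years:>2} years -> x{transistor_multiplier(years):,.0f}")
```

Under that assumption, twenty years gives roughly a thousandfold increase, and fifty years gives a factor in the tens of millions.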

His observation, known as Moore’s Law, has been used for fifty years to predict the future processing power of any technology involving integrated circuits, and it is a large part of why computers improve at such a surprising rate from year to year.

This is reportedly how Steve Jobs was able to leave behind plans for four years of iPhone technology after his death.

It’s how you can be certain that within a year that awesome new phone you bought will be an outdated hunk of junk. Or, at least, not nearly as feature-rich as the newer version.

Moore’s Law is one explanation for why computing power is expanding at such an amazing rate compared to inventions from previous generations. (Imagine Europeans having to invest in new sets of catapults every year back in the Middle Ages. That would have made killing people very expensive!)

Some believe Moore’s Law will continue indefinitely, yielding better integrated circuit technology year after year. Unfortunately, all technologies have their limits, and in 2013 Intel’s former chief architect Bob Colwell predicted “2020 as the earliest date we could call [Moore’s Law] dead.” Gordon Moore himself predicted the death of his law back in 2005, saying that transistor technology “can’t continue forever. The nature of exponentials is that you push them out and eventually disaster happens.”

The problem with integrated circuit technology has a lot to do with that chubby bunny game you might have played when you were younger. Remember that? The one where you would stuff as many marshmallows in your mouth as possible because you were young and “Unhealthy” was the best lifestyle?

Inevitably, you could only jam so many marshmallows into your mouth before your attempt to say “chubby bunny” sounded more like a groan for help than the happy chatter of a sugar-rushed youngster playing a fun game. At that point stuffing more marshmallows in your mouth became impossible, or, at least, pointless…and slightly dangerous.

The same is the case with integrated circuits: you can only shrink the transistors on a circuit, and the space between them, so far before you can’t possibly make them any smaller. It’s a physics thing. Today, industry professionals are already predicting a sharp slowdown in the exponential trend of computer advancement after 2020, as the spacing between transistors on an integrated circuit runs into a hard physical limit.

Because of this, the internet is loudly predicting an economic disaster as computing power levels off and the cost of creating ever smaller integrated circuits becomes enormous.

Think of it as a “computationocalypse.” The day when you buy a cell phone and after four years it’s still the most advanced cell phone on the market (which might be nice for the older folks).

Luckily for the younger generations, these fears may very well be misplaced.

Sorry grandma!

Enter the five paradigms of computing technology.

In this setting, a paradigm is a medium through which a method or intellectual process takes place. Five paradigms have existed so far in the life of computational technology, and Moore’s observation about integrated circuits belongs to the fifth.

As you can see in the chart above, integrated circuits were preceded by discrete transistors, which were preceded by vacuum tubes, then relays, and before those, electromechanical devices.

The advent of each new paradigm in computation resulted in a paradigm shift, which Ray Kurzweil describes as a major change in the method and intellectual process used to accomplish a task. As each computing technology began to lose its utility, a new technology was created to replace it. Basically, once a technology neared its maximum computational power, a better technology was invented to move past the previous technology’s ceiling.

So, if past events are any indication of the future, a new technology should rise to replace the integrated circuit as the integrated circuit is losing steam, and those crying out COMPUTATIONOCALYPSE! today will read, in a few years, like that 1949 Popular Mechanics quote:

“Computers of the future may weigh no more than 1.5 tons.”

Already, predictions are being made regarding the sixth paradigm of computing, such as carbon nanotubes or quantum computation. So although integrated circuits are reaching their end, we are by no means reaching an end in computational advancement.

But wait! There’s Moore!

Moore’s Law is actually one instance of a much larger trend, which Ray Kurzweil named the law of accelerating returns. The law of accelerating returns (LOAR), as described by Kurzweil, “describes the acceleration of the pace of and the exponential growth of the products of an evolutionary process.” It is the idea that improvements to a technology (and the subsequent adoption of that technology by the public) speed up over time.

In the case of computer engineering, computational components evolved from relays to vacuum tubes and eventually to integrated circuits. During this evolutionary process, the power of the technology continued to grow exponentially despite changes in the medium (refer back to the above graph), while also becoming more economically affordable, resulting in public accessibility.

Another example of LOAR exists in the phone industry. It took almost fifty years after the invention of the telephone in 1876 for phone technology to be widely adopted by the public. In comparison, it took only ten years, a fifth of the time, for the mobile phone to reach a wide audience: in 1990 there were fewer than ten million cell phone subscribers in the U.S., and by 2000 that number had reached over 100 million (1). Then, in 2007, Apple introduced the first mass-market consumer smartphone. Within four years “35% of American adults had a smartphone.”
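As a rough back-of-the-envelope check, the subscriber figures can be turned into an implied yearly growth rate. This sketch rounds the numbers to exactly 10 million in 1990 and 100 million in 2000 and assumes smooth compound growth in between; both are simplifying assumptions, and the helper names are hypothetical.

```python
import math


def annual_growth_rate(start: float, end: float, years: float) -> float:
    """Constant yearly growth rate that turns `start` into `end` over `years`."""
    return (end / start) ** (1.0 / years) - 1.0


def doubling_time(rate: float) -> float:
    """Years needed to double at a constant yearly growth rate."""
    return math.log(2.0) / math.log(1.0 + rate)


# Rounded figures from the text: ~10 million (1990) to ~100 million (2000).
rate = annual_growth_rate(10e6, 100e6, 10)
print(f"Implied growth: {rate:.1%} per year")
print(f"Doubling time:  {doubling_time(rate):.1f} years")
```

With those rounded inputs, subscriptions would have grown by about 26% a year, doubling roughly every three years.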

The adoption of phone technology follows an exponential curve, just like the power of the phone technology itself, which moved from landline phones with one function (connecting with other phones) to smartphones with ever-increasing web surfing and connectivity functions.

The law of accelerating returns is the premise behind the feasibility of an approaching singularity. Based on this exponential trend,

“we won’t experience 100 years of progress in the 21st century — it will be more like 20,000 years of progress (at today’s rate).” –Ray Kurzweil
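One simple way to land in the neighborhood of that figure is to assume the rate of progress doubles every decade and that each decade of the century runs at the rate it reaches by its end, measured against the rate in the year 2000. This is an illustrative assumption for the sake of the arithmetic, not Kurzweil's own derivation, and `equivalent_years` is a hypothetical helper.

```python
def equivalent_years(decades: int = 10, years_per_decade: int = 10) -> int:
    """Years of progress 'at today's rate', assuming decade k of the century
    advances at 2**k times the baseline rate (an illustrative assumption)."""
    total = 0
    for k in range(1, decades + 1):
        total += years_per_decade * 2 ** k
    return total


print(equivalent_years())  # 20460
```

A gentler reading, in which the first decade runs at the baseline rate, gives about half that total, so the exact number depends heavily on the assumptions. The order of magnitude is the point.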

It is an ever-growing belief among those versed in futurism that most of today’s population will live to witness the extraordinary new era of human existence known as the singularity. We continue to make exponential gains, year after year, with every technology we create, and these gains show no signs of slowing down.

The ultimate result will be a world with technological capabilities we can only dream of today, capabilities that will forever change the course of human history. That is the unlimited potential of the singularity.